
Dec 16, 2025

Building Scalable Furniture Visuals with AI Systems

Written by

Studio.Prompted

As furniture brands expand across e-commerce, marketplaces, and campaigns, the demand for high-quality visual content grows just as fast. The challenge is no longer producing a single good image, but maintaining consistency, flexibility, and speed across hundreds or thousands of products.

Traditional photography and 3D rendering workflows often struggle at this scale. They are expensive to update, slow to adapt, and difficult to reuse across changing collections. AI changes this equation, but only when it is applied as a system rather than as a one-off image generator.

This article breaks down how a structured AI workflow can be used to create scalable, on-brand furniture visuals that work across e-commerce, marketing, and product development.

What does an AI furniture content workflow involve?

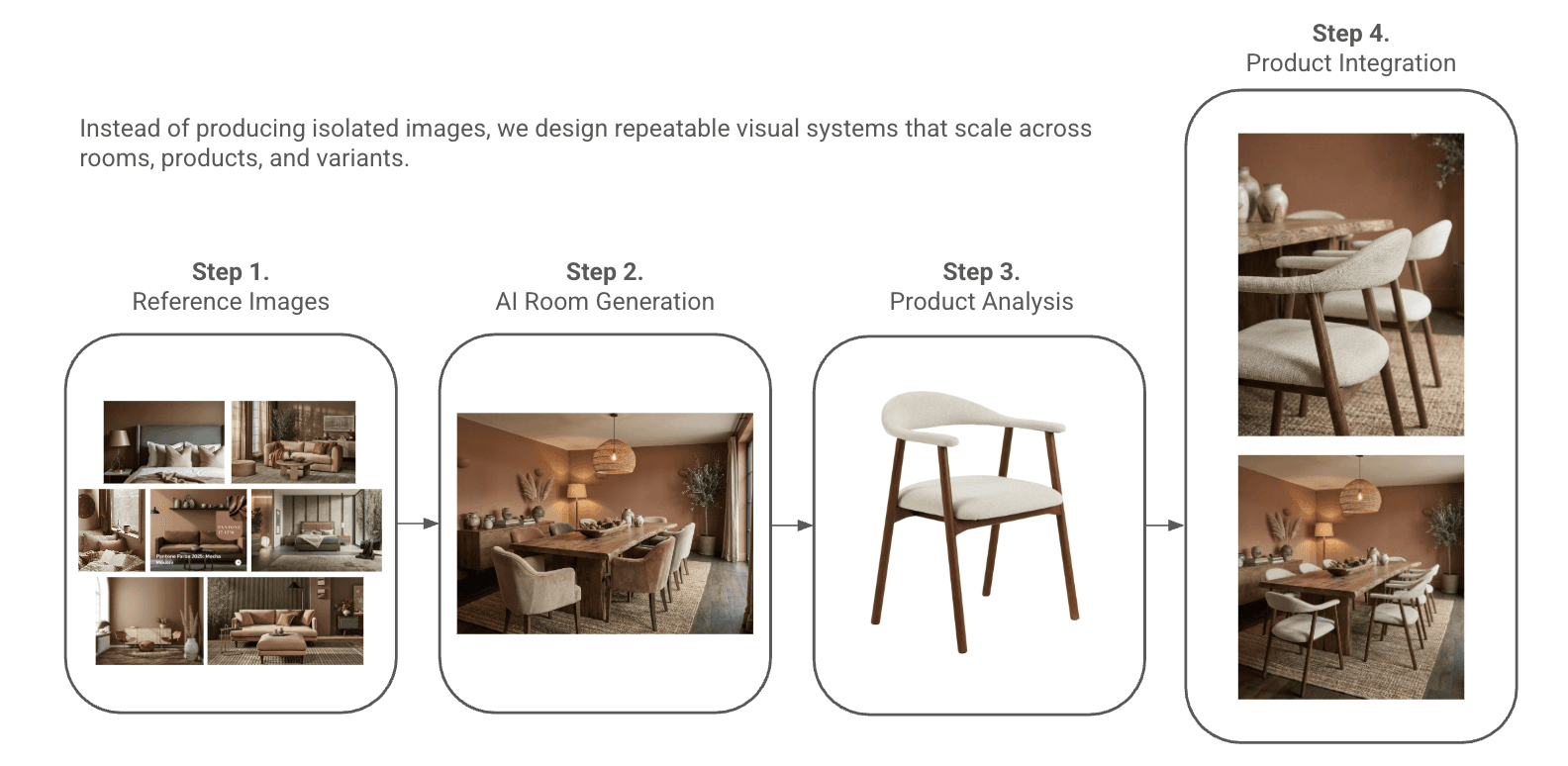

An AI-based furniture content workflow focuses on building reusable visual systems instead of isolated images. Rather than generating individual scenes from scratch each time, the process starts by defining environments, lighting rules, camera logic, and brand-specific visual constraints.

From there, existing product images or catalog assets are integrated directly into these environments. The same room can be reused across multiple products. The same product can be shown from multiple angles. The same visual language can scale across categories without reinventing the process each time.

Typical tasks within this workflow include:

Creating consistent room environments based on mood boards

Defining camera angles and framing rules that work across products

Integrating real product imagery into AI-generated scenes

Generating multiple viewpoints from a single source image

Enhancing and upscaling existing assets for modern e-commerce standards

The result is a visual system that behaves more like a production pipeline than a creative experiment.

From mood boards to image systems

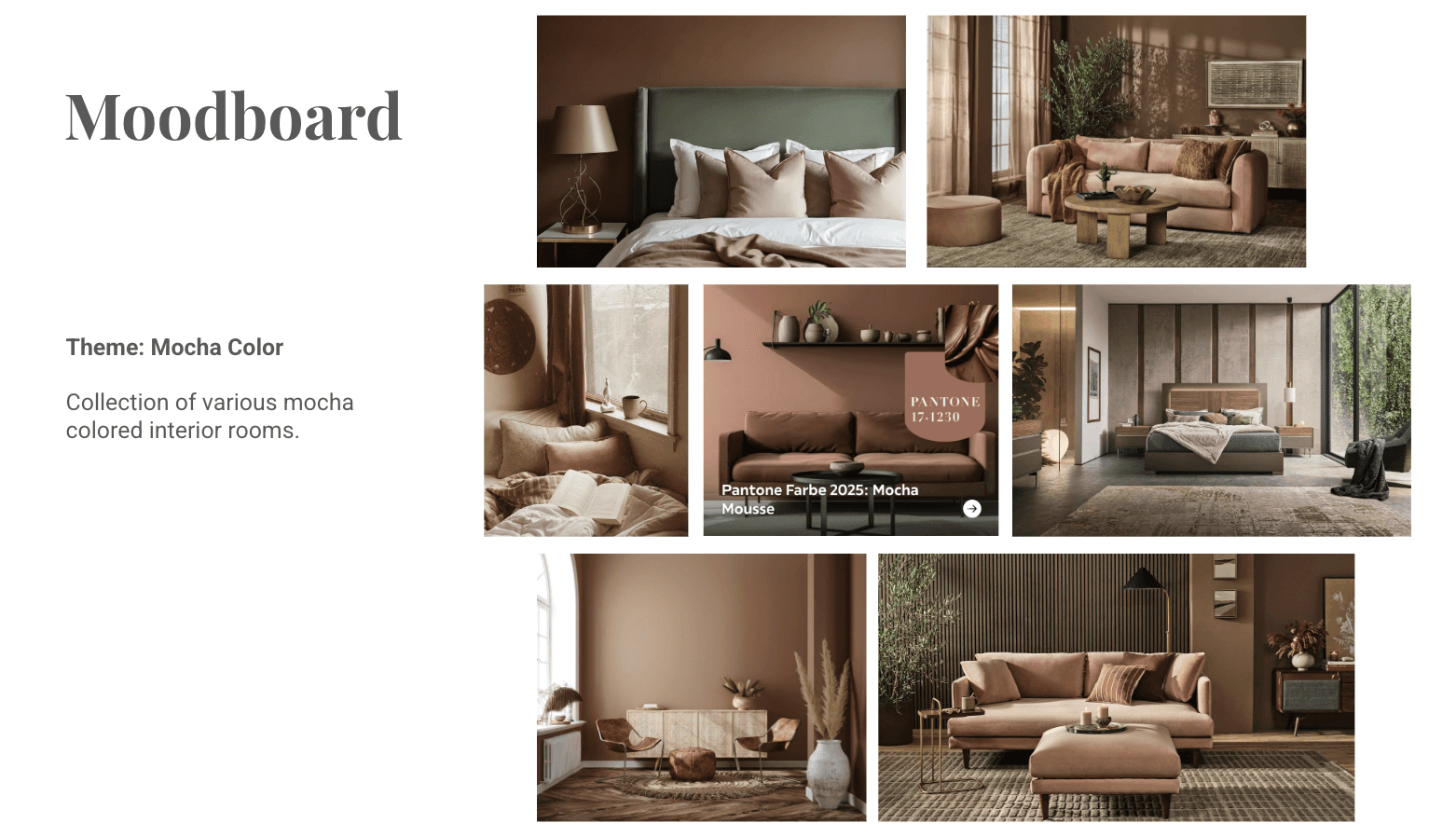

Every scalable furniture workflow starts with a mood board. Not as inspiration, but as a visual contract.

The mood board defines the non-negotiables: color palette, materials, light quality, atmosphere, and emotional tone. In this case, the direction is clear and intentional: mocha tones, warm woods, soft textiles, diffused natural light, and quiet editorial compositions.

This step matters because AI does not invent taste. It amplifies whatever structure you give it. Without a mood board, outputs drift from one generation to the next. With one, every output is pulled back toward the same look.

The mood board becomes the reference layer everything else inherits from.
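
One way to picture the mood board as a reference layer is as an immutable record that every scene definition points to. The sketch below is illustrative only: the `MoodBoard` and `SceneSpec` names, their fields, and the layout strings are assumptions, not part of any specific tool. The palette, material, light, and tone values are taken from the direction described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoodBoard:
    """Hypothetical container for the non-negotiables a mood board fixes."""
    palette: tuple[str, ...]
    materials: tuple[str, ...]
    light: str
    tone: str


@dataclass(frozen=True)
class SceneSpec:
    """A room definition that inherits every constraint from one mood board."""
    mood: MoodBoard
    room: str
    layout: str  # illustrative free-text field, an assumption of this sketch

    def constraints(self) -> dict[str, str]:
        # Mood-board values are read from the shared record, never copied,
        # so a scene cannot quietly carry its own version of them.
        return {
            "palette": ", ".join(self.mood.palette),
            "materials": ", ".join(self.mood.materials),
            "light": self.mood.light,
            "tone": self.mood.tone,
            "room": self.room,
            "layout": self.layout,
        }


MOCHA = MoodBoard(
    palette=("mocha tones",),
    materials=("warm woods", "soft textiles"),
    light="diffused natural light",
    tone="quiet editorial compositions",
)

living = SceneSpec(mood=MOCHA, room="living room", layout="low sofa facing a window")
bedroom = SceneSpec(mood=MOCHA, room="bedroom", layout="bed centered on the back wall")

# Both rooms report identical mood-board values; only room and layout differ.
shared = ("palette", "materials", "light", "tone")
print(all(living.constraints()[k] == bedroom.constraints()[k] for k in shared))
```

Because both dataclasses are frozen, changing the look means creating a new `MoodBoard` rather than editing one scene in isolation.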

Turning environments into prompts

Once the visual language is locked, the system moves from references to environments.

Instead of prompting for a product directly, the workflow first defines the room. A living room is described in detail: materials, layout, lighting direction, furniture density, and spatial balance. This description is not aesthetic fluff. It is a production brief.

From that room description, a structured prompt is generated. The prompt is not creative writing. It is a technical translation of the mood board into something a model can reliably execute.

Because the prompt is derived from the same visual references every time, the resulting images stay far more consistent across sessions and variations than ad hoc prompting allows.

This is where randomness stops and repeatability begins.
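
A minimal sketch of that translation step, assuming the room description is held as a simple dictionary. The field names, the `build_prompt` helper, and the output format are hypothetical, and the example values are illustrative. The point is only that a fixed field order and a completeness check make the prompt text itself reproducible.

```python
# Fixed field order: the same room description always yields the same prompt text.
FIELD_ORDER = ("room", "materials", "layout", "lighting", "density", "balance")


def build_prompt(room: dict[str, str]) -> str:
    """Render a room description into a prompt string (hypothetical format)."""
    missing = [name for name in FIELD_ORDER if name not in room]
    if missing:
        # An incomplete brief is rejected rather than left for the model to fill in.
        raise ValueError(f"room description is missing: {', '.join(missing)}")
    return "; ".join(f"{name}: {room[name].strip()}" for name in FIELD_ORDER)


# Illustrative values; the keys may arrive in any order.
living_room = {
    "lighting": "diffused natural light from the left",
    "room": "living room",
    "materials": "warm woods, soft textiles, mocha tones",
    "layout": "sofa against the back wall, low table in front",
    "density": "sparse",
    "balance": "open floor in the foreground",
}

prompt = build_prompt(living_room)
print(prompt)
```

This only guarantees identical prompt text. How closely two generations from the same prompt match still depends on the image model being used.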

Generating the base scene

The generated room image acts as a base world. Think of it as a digital set rather than a final asset.

At this stage, the image is evaluated for composition, lighting logic, depth, and realism. The goal is not perfection, but usability. The scene needs to support products without competing with them.

Once a strong base scene exists, it becomes reusable. The same environment can support multiple products, campaigns, or seasonal updates without being regenerated from scratch.

This is the shift from image creation to scene ownership.

Integrating real products into AI worlds

With the scene established, real products are introduced.

Existing product imagery from a client’s website or catalog is uploaded into the system. These products are then prompted into the generated environment, respecting scale, perspective, lighting direction, and material response.

This step is critical. The product does not get stylized to match the room. The room adapts subtly to support the product. Shadows, reflections, and contact points are adjusted so the object feels physically present.

Because the environment is controlled, this integration can be repeated across multiple products with predictable results.
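
The repeatable part of this step can be sketched as a batch of placement jobs that all target the same scene. Everything here is an assumption made for illustration: the `PlacementJob` record, its field names, the scene identifier, and the file names. No particular image tool or API is implied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlacementJob:
    """One product placed into one fixed scene (all field names hypothetical)."""
    scene_id: str
    product_image: str
    # The product image is treated as ground truth; only the scene may adapt.
    preserve_product: bool = True
    adjust: tuple[str, ...] = ("shadows", "reflections", "contact points")


def plan_jobs(scene_id: str, product_images: list[str]) -> list[PlacementJob]:
    """Build one job per unique product against the same scene, in a stable order."""
    return [PlacementJob(scene_id, image) for image in sorted(set(product_images))]


# Duplicate uploads collapse into a single job.
jobs = plan_jobs("living-room-01", ["sofa.png", "chair.png", "sofa.png"])
for job in jobs:
    print(job.scene_id, job.product_image, job.adjust)
```

Keeping the scene identifier constant across the batch is what makes the results comparable from one product to the next.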

Expanding with camera logic, not new scenes

Once a product is placed into a scene, the system no longer needs new environments. It needs new viewpoints.

Different camera angles are generated from within the same world: wider lifestyle shots, tighter detail crops, lower angles, and more editorial framings. All of these variations inherit the same lighting, materials, and atmosphere.

This is where scale multiplies. One environment supports many products. One product supports many angles. The number of usable images is the product of those counts, and one system feeds e-commerce, campaigns, and social formats simultaneously.

No reshoots. No re-rendering entire rooms. Just controlled variation.
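
To make the arithmetic concrete, here is a small sketch that enumerates every shot a single scene could yield. The scene, product, and format identifiers are placeholders and the counts are arbitrary; the camera names follow the framings listed above.

```python
from itertools import product

# Placeholder identifiers, assumed for illustration only.
scenes = ["living-room-01"]
products = ["sofa", "armchair", "side-table"]
cameras = ["wide lifestyle", "detail crop", "low angle", "editorial"]
formats = ["e-commerce", "campaign", "social"]

# Every combination is one shot request; lighting and materials come from the scene.
shots = [
    {"scene": s, "product": p, "camera": c, "format": f}
    for s, p, c, f in product(scenes, products, cameras, formats)
]

# 1 scene x 3 products x 4 cameras x 3 formats
print(len(shots))  # 36
```

Adding one more camera angle to this list adds nine shots, one per product and format pair, without creating a new environment.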

Why this matters for e-commerce teams

E-commerce teams are under constant pressure to produce more content with fewer resources. New products, new formats, new platforms. The visual workload grows, but the timelines do not.

A structured AI workflow reduces friction in three key ways:

Speed: New visuals can be generated in hours instead of weeks

Consistency: Every image follows the same visual logic

Flexibility: Products can be repositioned, re-angled, or re-contextualized without restarting production

This is especially valuable for brands managing large catalogs, frequent launches, or multiple regional markets.

AI as infrastructure, not replacement

This workflow is not about replacing photographers, designers, or creative direction. It’s about giving teams a system that supports them when traditional production becomes a bottleneck.

AI works best when it operates inside constraints. Mood boards, brand rules, product logic, and camera systems provide those constraints. Without them, AI outputs remain unpredictable. With them, AI becomes reliable.

That reliability is what allows furniture brands to scale visuals without sacrificing quality or control.

Closing

High-quality furniture visuals are no longer defined by how realistic a single image looks. They are defined by how well a system can adapt, repeat, and evolve over time.

The workflow behind the images matters more than the images themselves.

At Studio Prompted, the focus is on building these systems so brands can move faster, stay consistent, and turn AI into a long-term visual asset rather than a short-term experiment.